Why qualitative research is critical in government

And how to make research findings actionable

This post is based on an interview with Dana Chisnell, the first head of customer experience for the Department of Homeland Security. Dana was a founding member of the U.S. Digital Service team and a co-founder of the Center for Civic Design.

While working alongside researchers and designers at Bloom Works and government agencies over the last 5 years, I’ve learned that the most effective research does more than just dig up problems. It builds the relationships and understanding necessary to address them. I reflected on this theme again during a recent chat with Bloom’s newest Design Leader in Residence, Dana Chisnell.

Dana was the first head of customer experience for the Department of Homeland Security, but she has spent most of her career trying to improve the way the public interacts with government.

As Dana explains, there are often nuanced problems that get in the way of finding or implementing the right solution. Qualitative research can be a tool to help reveal some of those nuanced problems and create meaningful change.

She also shared tips on how researchers and program owners can turn qualitative research findings into action.

Why qualitative research is critical

It reminds us we aren’t the experts

We don’t know what we don’t know. But by simply observing and listening to users in the context where they’re trying to get something done, we can use qualitative research to uncover insights. Researchers and designers often don’t have the lived experience that their users do.

Even if we do have comparable experience, users and participants in research are the experts on their specific experiences. As Dana explained, researcher experience and domain expertise often are not enough to close gaps for users.

Dana reflected on her own experience designing the first-ever digital ballot interface that worked across browsers. Her team of experts used best practices to make a prototype.

“We recruited a bunch of people to do individual sessions where they interacted with this ballot as if they were voting,” Dana said. But after the first few sessions, the design team realized “very quickly” that it fell flat.

“The layout was wrong, and there’s way too much in the way of wording, and it’s in the wrong place.”

She explained why it felt like such a surprise.

“There’s a temptation to just stay with the best practices because you’ve developed this expertise. But I am here to tell you that best practices bit me in the butt.”

The team didn’t defend their first prototype. And they didn’t see this as a failure. They celebrated, and they iterated on the design over the research sessions that followed.

It fills in important context

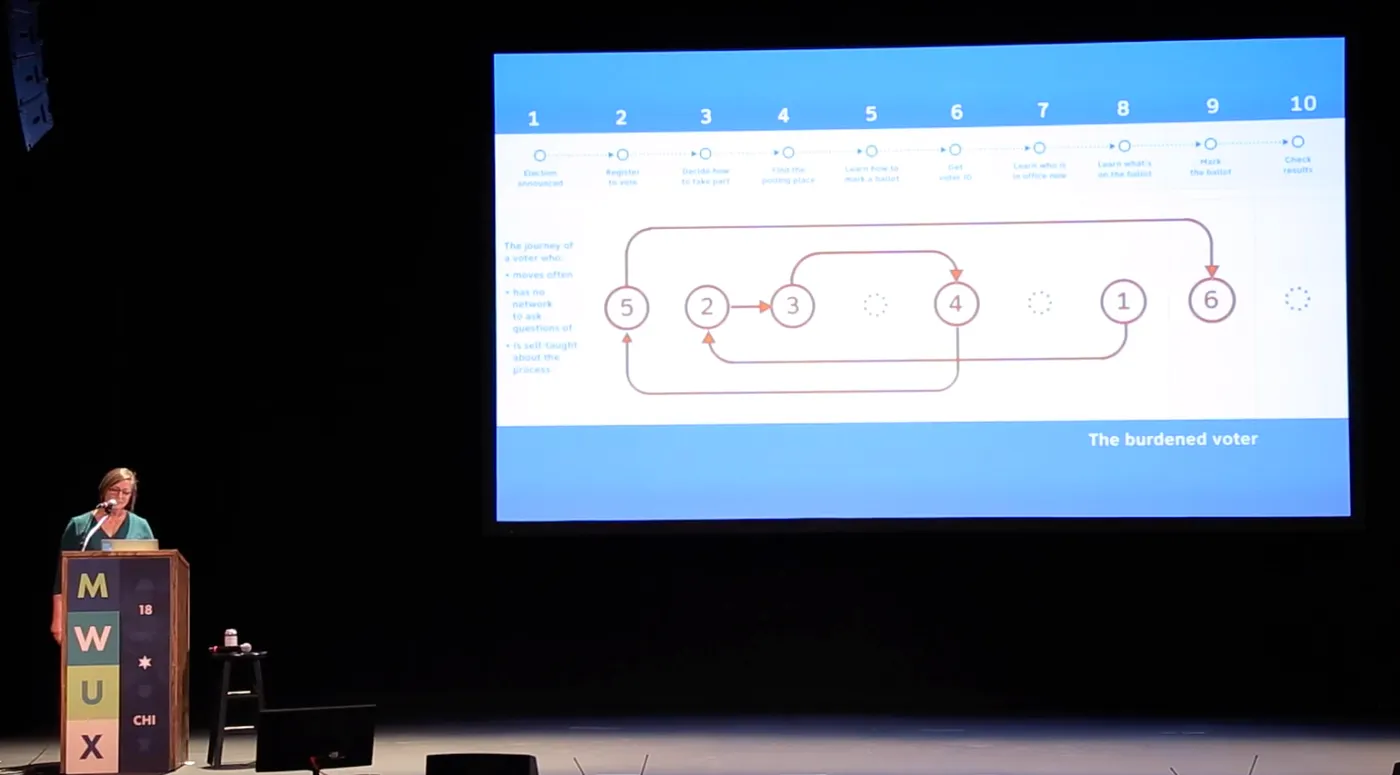

As consultants and program managers, we’re often missing important context about how users experience the tools and programs we’re working with. As Dana explains, “We can imagine the context of use, but that isn’t the same as seeing it, hearing it, feeling it.”

When somebody is using a digital tool or applying for a government benefit, “so often we think about it being a one-to-one relationship.” For example, one person is signing up online to get a benefit. But “this is almost never the case,” says Dana.

“It is almost always a person in various different kinds of relationships who might be supporting other people, or might be getting support from those other people.”

In 2024, 5 hurricanes made landfall in the continental United States. Immediately following those hurricanes, a customer experience team at the Federal Emergency Management Agency (FEMA) went out into the field. They took the agency’s deputy chief of staff with them.

“When you’re in the field you can see both how your personnel are interacting with those survivors and what kind of information survivors are coming away with…. By doing that research and asking those kinds of questions gently with people who are obviously traumatized, FEMA could start to identify new programs that could be implemented quickly.”

Dana explains that the statistics on how many people applied for housing, baby formula, tents, and other needs were useful, but they lacked important context about what people needed where they were.

The field research led to 3 new programs—including basic cash assistance and support for transitional housing.

“The experience isn’t only the interaction that you have with that service on that day or in that episode. It starts way before and ends way after that slice. Looking at what happened before and what happened after, I think is really important. But you also need to do enough research to understand what’s happening in a particular situation, understand the range of people who could be sitting at a computer or at the kitchen table...”

How to make research actionable

Dana shares some practical tips on how both researchers and program owners can use qualitative research to make meaningful change.

1. Include decision-makers in the discovery process

Don’t just present findings — involve decision-makers in gathering them. When program managers and leaders witness user struggles firsthand, they become advocates for change rather than skeptics of recommendations.

2. Focus on feasibility from the start

Understand the constraints, timelines, and tolerance for change at your organization before making recommendations. The best insights are worthless if they can’t be implemented.

3. Share recommendations thoughtfully

How you present findings matters as much as what you present. Recommendations shouldn’t be a surprise. “Pre-wire” insights throughout the research to partners, stakeholders, and leadership. Send materials in advance, acknowledge inherited challenges, and frame discoveries as opportunities rather than failures.

4. Maintain continuous relationships

Research shouldn’t end with recommendations. The most successful projects involve ongoing collaboration during implementation. That way, insights don’t get lost in translation, and the program can act on lessons learned during implementation.

5. Connect insights to value

Quantify the impact whenever possible. Think about time saved for people on the administrative side: “giving time back” to them to focus on the mission and the objectives they were hired for is not only a gift to individuals but also a step toward more effective services. You may also be able to identify operational costs saved. For example, how many tons of paper no longer need to be printed and stored when you move services online? And how does this offset the cost of developing and maintaining a digital service?

Thanks so much to Dana for taking the time to chat with me about her inspiring experience in user research. Check out more of Dana’s work on her website.